Project information

- Category: Deep Learning, Artificial Neural Networks, Computer Vision, Robotics, Edge Computing

- Project date: December 2023

- GitHub: DriveBerry

Introduction

DriveBerry is an innovative autonomous vehicle project developed as part of the MSML642: Robotics class. This project combines cutting-edge technologies to create a miniature self-driving vehicle that demonstrates two crucial functionalities for autonomous vehicles: precise lane following and accurate signage detection. Key features include:

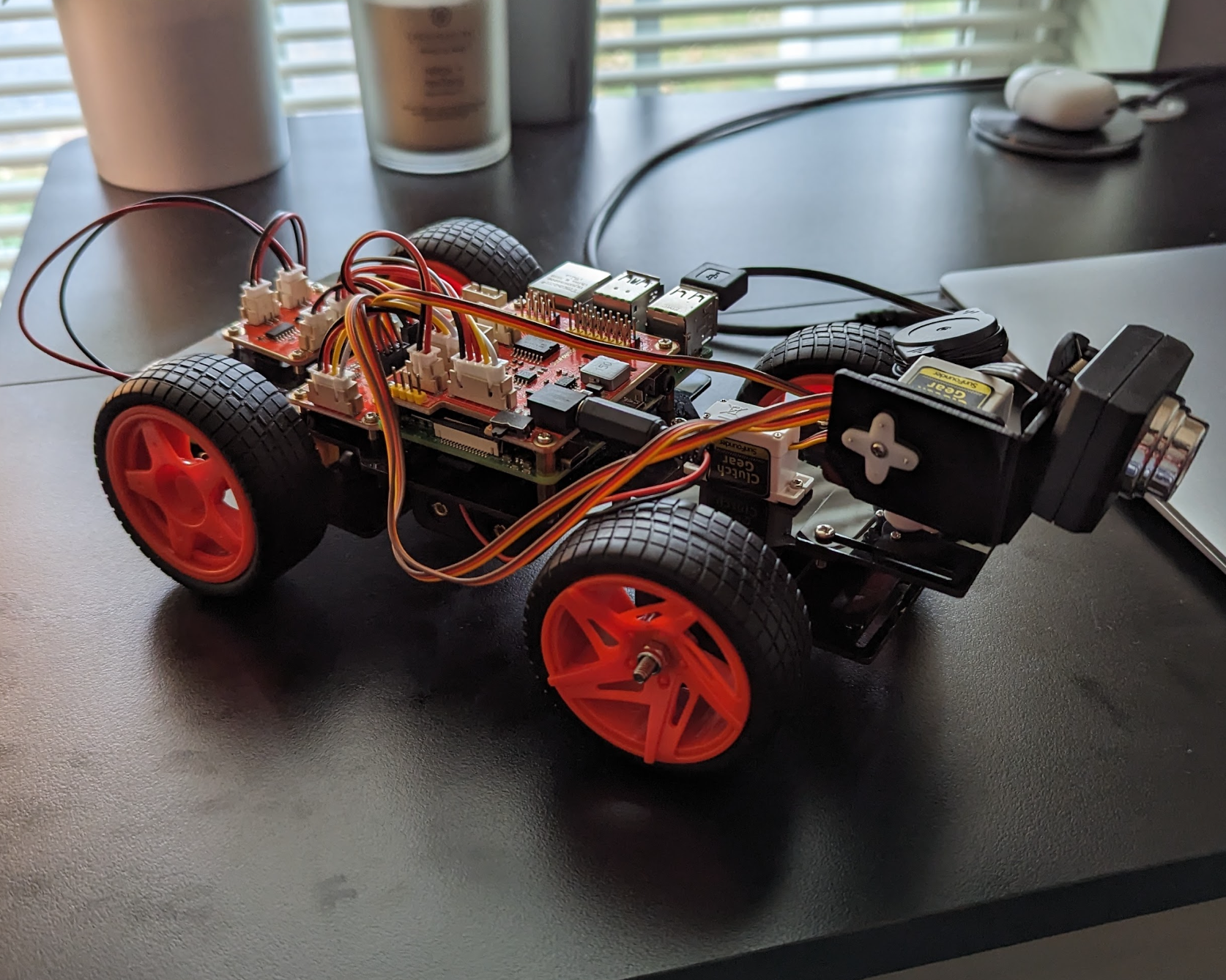

- Raspberry Pi-based control system with PiCar kit integration

- Google Edge TPU for efficient deep learning inference

- OpenCV-based lane following system with 100% accuracy

- CNN-based autonomous driving system with 83% accuracy

- Real-time traffic sign recognition with 100% accuracy

- Object detection at 30-40 FPS on the Edge TPU versus 1-2 FPS on the CPU

The project demonstrates the practical application of machine learning and computer vision in robotics, overcoming various challenges in hardware assembly, software compatibility, and real-world implementation.

Objective

The primary objective of DriveBerry was to develop a miniature autonomous vehicle that could navigate lanes and detect traffic signs using machine learning techniques. The project aimed to:

- Implement and compare two distinct methods for lane navigation (OpenCV and CNN-based)

- Develop an efficient object detection system for traffic sign recognition

- Explore the practical implementation of computer vision and deep learning in robotics

- Optimize performance through edge computing solutions

Process

The process of building DriveBerry involved several key steps:

- Hardware Assembly: Integrated PiCar kit components, Raspberry Pi, Google Edge TPU, and camera system.

- Software Setup: Configured Debian-based Linux OS, installed necessary libraries (OpenCV, TensorFlow, PyCoral).

- Library Compatibility: Resolved version conflicts between OpenCV and TensorFlow, created custom environment with compatible dependencies.

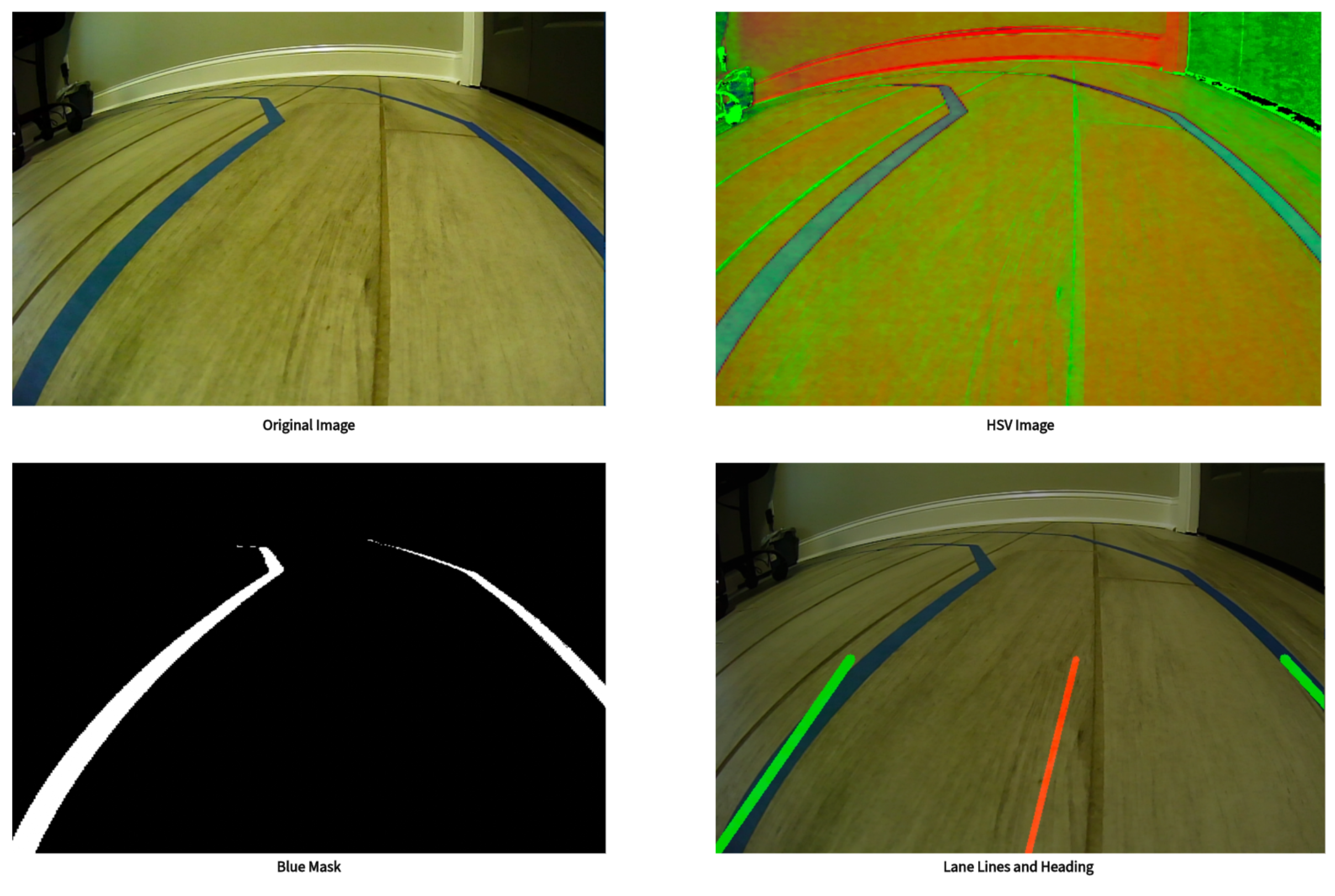

- Lane Navigation Development: Implemented OpenCV-based system with color thresholding and Hough Transform.

- Dataset Creation: Collected and processed images from lane navigation system to create a custom dataset for CNN training.

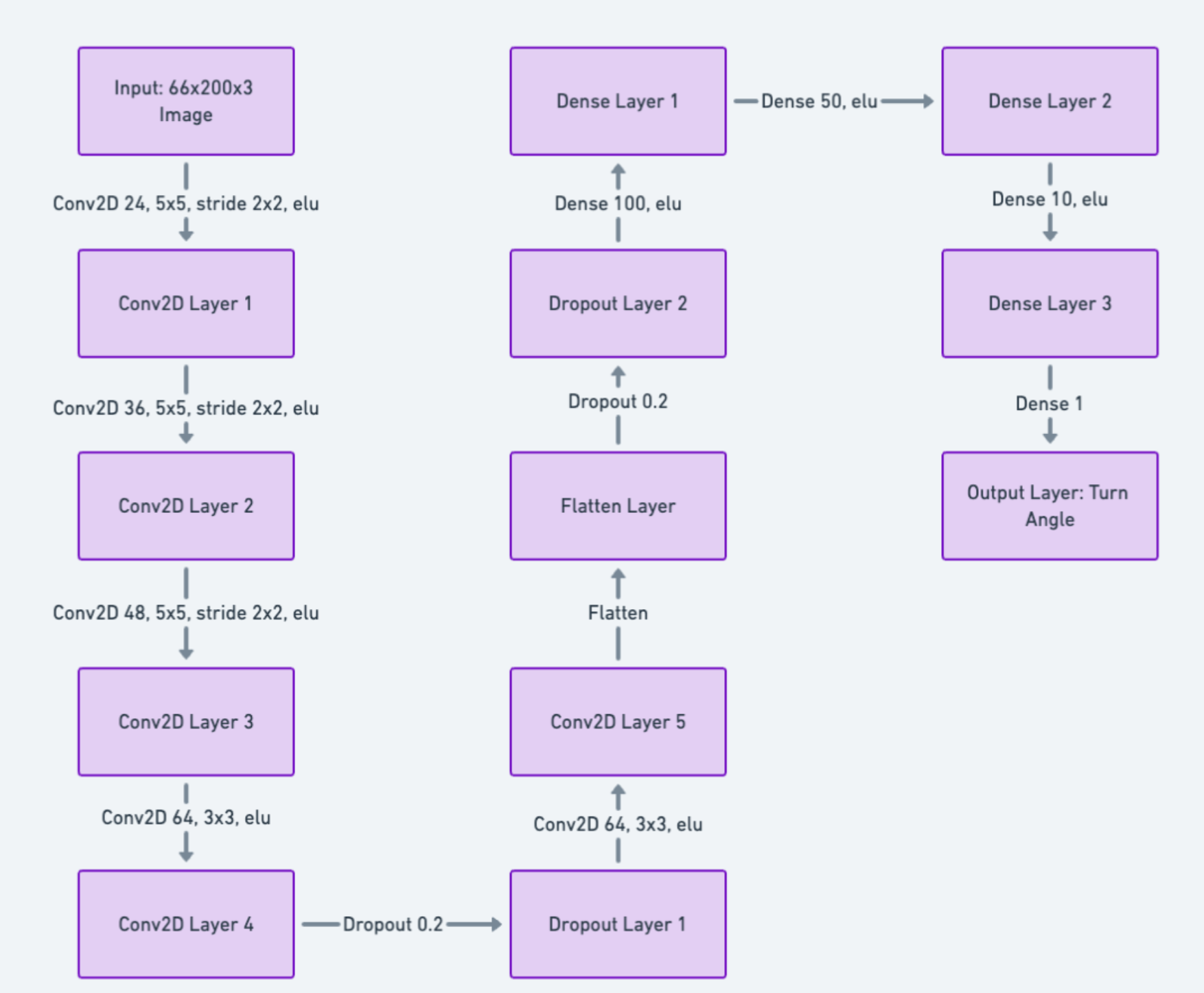

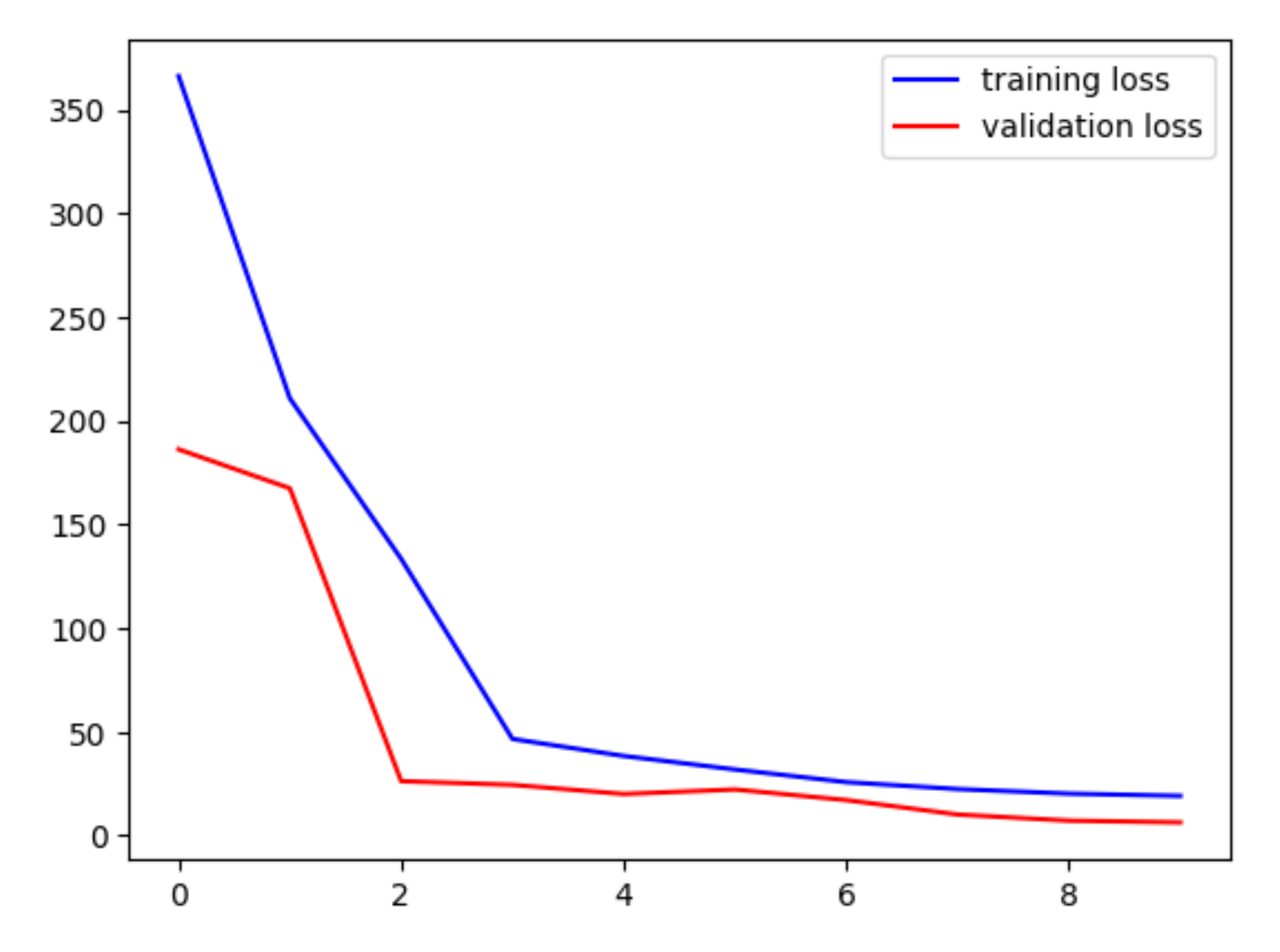

- CNN Model Development: Created custom CNN architecture with 5 convolutional layers and 3 dense layers.

- Model Evaluation: Achieved MSE of 8.8 and R-squared of 94.03% in model performance testing.

- Object Detection Setup: Integrated quantized SSD MobileNet V2 model for traffic sign recognition.

- Edge TPU Optimization: Implemented real-time detection achieving 30-40 FPS performance.

- Stop Sign Detection: Implemented specialized detection system with 100% accuracy.

- System Integration: Combined lane navigation and object detection systems.

- Testing and Validation: Conducted extensive testing under various lighting and road conditions.

- Performance Optimization: Fine-tuned parameters for optimal real-world performance.

- Documentation: Created comprehensive documentation for system architecture and implementation.
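The lane navigation step above can be sketched roughly as follows. This is a minimal illustration, not the project's code: it assumes the color thresholding and Hough Transform stages (for example OpenCV's `cv2.HoughLinesP`) have already produced line segments as `(x1, y1, x2, y2)` tuples, and it shows one common way to turn those segments into a steering offset. The function names, the slope-sign split into left and right lines, and the convention that 0 degrees means straight ahead are all assumptions made for this example.

```python
import math


def fit_lane_lines(segments, min_slope=0.1):
    """Average Hough segments into one left and one right lane line.

    Each line is returned as (slope, intercept) for y = slope * x + intercept,
    or None when no usable segment was found on that side.
    """
    left, right = [], []
    for x1, y1, x2, y2 in segments:
        if x1 == x2:
            continue  # vertical segment: slope is undefined, skip it
        slope = (y2 - y1) / (x2 - x1)
        if abs(slope) < min_slope:
            continue  # near-horizontal segments are unlikely to be lane lines
        intercept = y1 - slope * x1
        # Image y grows downward, so the left lane line has a negative slope.
        (left if slope < 0 else right).append((slope, intercept))

    def mean(lines):
        if not lines:
            return None
        n = len(lines)
        return (sum(s for s, _ in lines) / n, sum(b for _, b in lines) / n)

    return mean(left), mean(right)


def x_at(line, y):
    """x coordinate where a (slope, intercept) line crosses image row y."""
    slope, intercept = line
    return (y - intercept) / slope


def steering_offset_deg(segments, frame_width, frame_height):
    """Steering offset in degrees: 0 is straight, negative left, positive right."""
    left, right = fit_lane_lines(segments)
    look_ahead_y = frame_height / 2  # aim at the middle row of the frame
    if left is not None and right is not None:
        lane_center = (x_at(left, look_ahead_y) + x_at(right, look_ahead_y)) / 2
        x_offset = lane_center - frame_width / 2
    elif left is not None or right is not None:
        line = left if left is not None else right
        # Only one line visible: follow its direction from the bottom row upward.
        x_offset = x_at(line, look_ahead_y) - x_at(line, frame_height)
    else:
        return 0.0  # nothing detected: keep going straight
    return math.degrees(math.atan2(x_offset, frame_height - look_ahead_y))


# Hypothetical segments for a 320x240 frame where the lane sits right of center.
segments = [(40, 240, 160, 120), (360, 240, 240, 120)]
angle = steering_offset_deg(segments, frame_width=320, frame_height=240)
print(f"steering offset: {angle:.1f} degrees")  # about 18.4, i.e. steer right
```

Mapping this offset to an actual servo command depends on the vehicle's steering hardware and is left out here.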

Tools and Technologies

The project used the following tools and technologies:

Hardware:

- PiCar Kit with 4 wheels, 2 motors, chassis, and 3 servos

- Raspberry Pi 3B+ as the main onboard computer

- Google Edge TPU for deep learning inference

- USB camera for video capture

- Rechargeable battery pack

Software:

- Debian-based Linux OS

- Python 3.x with Conda environment

- OpenCV for image processing and lane detection

- TensorFlow/TFLite for model development

- PyCoral for Edge TPU integration

- PiCar library for vehicle control

- Matplotlib for visualization

Machine Learning Models:

- Custom CNN for autonomous driving (66x200x3 input)

- Quantized SSD MobileNet V2 for object detection

- OpenCV-based lane detection system
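To make the 66x200x3 input of the custom CNN more concrete, the sketch below traces how the feature map shrinks through five convolutional layers. The write-up does not state filter counts, kernel sizes, or strides, so the values in `ASSUMED_CONV_LAYERS` are assumptions borrowed from a widely used end-to-end driving network design with the same input size. They may not match the DriveBerry model.

```python
def conv_output(size, kernel, stride):
    """Output length along one axis for an unpadded ('valid') convolution."""
    return (size - kernel) // stride + 1


# (filters, kernel size, stride) per layer. Assumed values, not from the project.
ASSUMED_CONV_LAYERS = [
    (24, 5, 2),
    (36, 5, 2),
    (48, 5, 2),
    (64, 3, 1),
    (64, 3, 1),
]


def trace_shapes(height=66, width=200, channels=3, layers=ASSUMED_CONV_LAYERS):
    """Return the (height, width, channels) shape after the input and each layer."""
    shapes = [(height, width, channels)]
    for filters, kernel, stride in layers:
        height = conv_output(height, kernel, stride)
        width = conv_output(width, kernel, stride)
        shapes.append((height, width, filters))
    return shapes


shapes = trace_shapes()
for index, shape in enumerate(shapes):
    label = "input" if index == 0 else f"conv{index}"
    print(f"{label}: {shape}")

final_h, final_w, final_c = shapes[-1]
flattened = final_h * final_w * final_c  # features passed to the dense layers
print("flattened features:", flattened)
```

Under these assumed settings the input shrinks to a 1x18x64 feature map, so the dense layers would receive 1152 values. Different kernel sizes or padding would change these numbers.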

Advancing Robotics with Machine Learning

DriveBerry applies machine learning to a small robotics platform. By combining traditional computer vision techniques with deep learning and edge computing, the project demonstrates how these methods can be deployed together on embedded hardware in a real-world setting.

Offloading inference to the Google Edge TPU raised throughput from 1-2 FPS on the CPU to 30-40 FPS, which makes the system suitable for real-time autonomous navigation tasks. The same approach can be adapted to other autonomous systems, from industrial robots to smart home devices.
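The frame rates reported in this write-up (30-40 FPS on the Edge TPU, 1-2 FPS on the CPU) can be restated as per-frame time budgets, which is often the more useful figure when judging whether a control loop can react in time. The short calculation below is only arithmetic on those reported ranges. The variable and function names are illustrative.

```python
def frame_time_ms(fps):
    """Average time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


EDGE_TPU_FPS = (30, 40)  # range reported for the Edge TPU
CPU_FPS = (1, 2)         # range reported for the CPU

edge_tpu_ms = [frame_time_ms(fps) for fps in EDGE_TPU_FPS]
cpu_ms = [frame_time_ms(fps) for fps in CPU_FPS]

# Most conservative and most optimistic speedup implied by the two ranges.
speedup_min = EDGE_TPU_FPS[0] / CPU_FPS[1]
speedup_max = EDGE_TPU_FPS[1] / CPU_FPS[0]

print(f"Edge TPU: {edge_tpu_ms[1]:.1f}-{edge_tpu_ms[0]:.1f} ms per frame")
print(f"CPU: {cpu_ms[1]:.0f}-{cpu_ms[0]:.0f} ms per frame")
print(f"Speedup: {speedup_min:.0f}x to {speedup_max:.0f}x")
```

In other words, each frame takes roughly 25-33 ms on the Edge TPU compared with 500-1000 ms on the CPU, a speedup of about 15x to 40x.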